Herman Kahn is probably best known as a nuclear war theorist. In his 1960 book On Thermonuclear War, Kahn explored scenarios once deemed unthinkable, helping to shape Cold War defense policy. His work even provided inspiration—albeit exaggerated—for the eponymous character in Stanley Kubrick’s 1964 film, Dr. Strangelove or: How I Learned to Stop Worrying and Love the Bomb. Kahn developed the concept of the “escalation ladder,” a framework outlining 44 rungs of potential nuclear conflict, from diplomatic posturing to full-scale war, which influenced military planning by introducing a more structured way to think about deterrence and escalation control.

Later, Kahn turned his attention to the future of economic and technological progress. It’s this second act of his career that merits his large presence in my 2023 book The Conservative Futurist: How to Create the Sci-Fi World We Were Promised. Throughout the 1970s and early 1980s, he rejected prevailing fears of overpopulation and resource depletion, arguing instead that human ingenuity would sustain growth and prosperity. His market-driven optimism made him a fitting lodestar for The Conservative Futurist, which draws heavily on Kahn’s belief that innovation, not bureaucratic planning, is the surest route to progress. Upon his death in 1983, President Ronald Reagan praised him as “a futurist who welcomed the future,” recognizing his confidence in humanity’s ability to shape its destiny.

His pro-progress, Up Wing vision is as relevant as ever. But so, it turns out, is his notion of an escalation ladder. The authors of the new report “Superintelligence Strategy,” which examines the national security implications of advanced AI, rework the concept for the emerging Age of AI. (The authors include former Google CEO Eric Schmidt.) From the abstract:

Rapid advances in AI are beginning to reshape national security. Destabilizing AI developments could rupture the balance of power and raise the odds of great-power conflict, while widespread proliferation of capable AI hackers and virologists would lower barriers for rogue actors to cause catastrophe. Superintelligence—AI vastly better than humans at nearly all cognitive tasks—is now anticipated by AI researchers. Just as nations once developed nuclear strategies to secure their survival, we now need a coherent superintelligence strategy to navigate a new period of transformative change. We introduce the concept of Mutual Assured AI Malfunction (MAIM): a deterrence regime resembling nuclear mutual assured destruction (MAD) where any state’s aggressive bid for unilateral AI dominance is met with preventive sabotage by rivals. Given the relative ease of sabotaging a destabilizing AI project—through interventions ranging from covert cyberattacks to potential kinetic strikes on datacenters—MAIM already describes the strategic picture AI superpowers find themselves in. Alongside this, states can increase their competitiveness by bolstering their economies and militaries through AI, and they can engage in nonproliferation to rogue actors to keep weaponizable AI capabilities out of their hands. Taken together, the three-part framework of deterrence, nonproliferation, and competitiveness outlines a robust strategy to superintelligence in the years ahead.

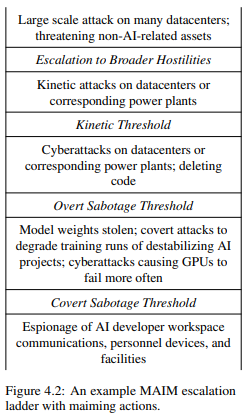

One concept explored in the paper is an AI escalation ladder that adapts Kahn’s Cold War framework to the modern challenges of AI competition and conflict between nations. Like Kahn’s original ladder, it illustrates how tensions surrounding advanced AI systems might gradually escalate, from peaceful competition through increasingly aggressive actions, possibly leading to severe geopolitical crises or open conflict. The goal is to help policymakers anticipate critical thresholds and respond before tensions spiral into serious crises or unintended conflicts. Again, from the report:

Just as nuclear rivals once mapped each rung on the path to a launch to limit misunderstandings, AI powers must clarify the escalation ladder of espionage, covert sabotage, overt cyberattacks, possible kinetic strikes, and so on. For deterrence to hold, each side’s readiness to maim must be common knowledge, ensuring that any maiming act—such as a cyberattack—cannot be misread and cause needless escalation.

I’m not a war theorist, but I think this report is a great example of how AI, as a general-purpose technology, affects not only all aspects of an economy but also all aspects of public policy, including national security.

The post The Ladder of AI Escalation appeared first on American Enterprise Institute – AEI.