How long, if ever, before we achieve artificial intelligence that can do pretty much everything a human worker can currently do? My shorthand way of gauging the speculative timeline relies, at least partially, on prediction markets. And the most recent message from them suggests tempering expectations, at least a bit.

For example: The current Metaculus consensus sees artificial general intelligence (AGI) happening in November 2032, versus a forecast of March 2030 back in February. Over at Manifold, the chance of an AI-driven break in “trendline US GDP, GDP per capita, unemployment, or productivity” has declined to 25 percent from 52 percent in January.

If you’re looking for an explanation, consider that it might be the accumulation of lots of little doubts. The new RAND Corporation report “Charting Multiple Courses to Artificial General Intelligence” outlines various reasons for skepticism that large language models (LLMs) will lead to AGI. The conventional wisdom holds that AGI will emerge naturally from continually enlarging language models with more parameters (the numerical values in neural networks that get adjusted during training to help models learn patterns from data), training data, and computational heft.

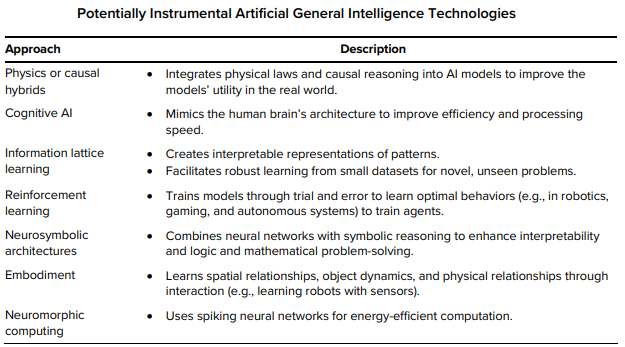

But RAND’s researchers pour cold water on this technological determinism. Such “hyperscaling,” they argue, suffers from fundamental flaws. Among them: LLMs display a peculiar confidence when wrong, akin to an articulate but unreliable expert. More troublingly, these systems demonstrate rote memorization rather than genuine reasoning, faltering when simple problems are rephrased. Practical constraints loom large: High-quality training text is finite, and meeting energy requirements is becoming prohibitively expensive. The report advocates a portfolio approach to the AGI race against China, embracing alternative technologies, from physics-infused neural networks to brain-inspired computing, to supplement LLMs.

Even if RAND is right, there’s little reason to abandon hope for substantive economic acceleration.

As Ethan Mollick, a Wharton professor who studies AI, recently microblogged on X:

> I don’t mean to be a broken record but AI development could stop at the o3/Gemini 2.5 level and we would have a decade of major changes across entire professions & industries (medicine, law, education, coding…) as we figure out how to actually use it.

Indeed, most of the rosy productivity and economic growth forecasts you’ve probably seen, especially those from Wall Street banks and business consultants, don’t assume AGI in their scenarios. Rather, they treat generative AI as an important “general purpose” technology, but one of a kind we have historical experience with, such as the steam engine and the internet. Even so, some estimates suggest AI could double productivity growth rates in the coming decade. That implies something more like three percent GDP growth, not 30 percent, but still better than long-run Federal Reserve and CBO forecasts of less than two percent.

The post A World with Smart AI but Not Human-Level AI appeared first on American Enterprise Institute – AEI.